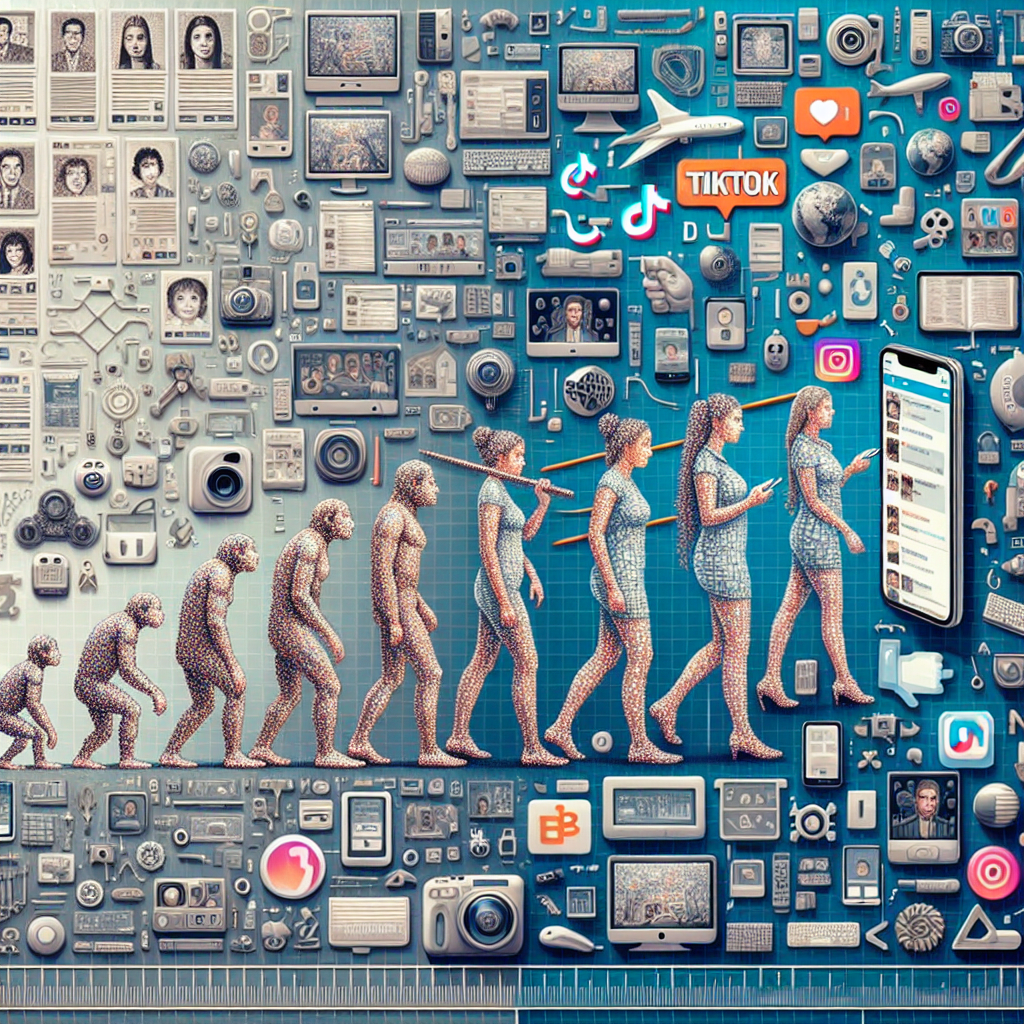

The explosive growth of deepfake technology has transformed the digital landscape, challenging our assumptions about the reliability of online content. As AI-driven tools become more accessible, the line between genuine footage and manipulated media blurs, prompting urgent debates around ethics, responsibility, and public safety. This article explores the technological foundations, societal impacts, and emerging solutions aimed at preserving authenticity in a world where images and videos can be fabricated with alarming realism.

Understanding the Rise of Deepfake Technology

Artificial intelligence researchers and hobbyists alike have leveraged generative adversarial networks (GANs) to produce videos that convincingly swap faces, mimic voices, and fabricate entire scenarios. Initially popularized in entertainment and satire, these tools now power a vast range of applications, from harmless meme creation to sophisticated political disinformation campaigns. On social media platforms, users encounter deepfakes in formats ranging from subtle face edits to full-length clips portraying public figures saying or doing things they never said or did. While some creators push the boundaries of digital art, others exploit the same technology to undermine trust in public institutions or to harass private individuals. The rapid evolution of open-source libraries, cloud-based processing, and smartphone-friendly apps has made deepfake generation more accessible than ever, raising questions about where creative freedom ends and malicious manipulation begins.

Technical Foundations

At the core of GAN-based deepfake production are two neural networks: a generator that creates synthetic data and a discriminator that evaluates its realism. The two are trained against each other, and each round of training lets the generator refine its outputs until the discriminator can no longer reliably distinguish fakes from authentic samples. Advanced techniques such as attention mechanisms, style transfer, and audio-visual synchronization have further boosted fidelity, allowing for lip-syncing that matches original speech patterns and expressions. Even novices can combine pre-trained models with user-friendly interfaces to generate convincing content in minutes, removing the traditional barriers to entry for complex video editing.
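
The tug-of-war between generator and discriminator can be made concrete with the losses each side tries to minimize. The following is a minimal sketch, not any particular tool's implementation: it assumes the common binary cross-entropy formulation with the non-saturating generator loss, and the function names and the sample probabilities are hypothetical, chosen only for illustration.

```python
import math
from typing import Sequence

def bce(prob: float, label: int) -> float:
    """Binary cross-entropy for one predicted probability and a 0/1 label."""
    eps = 1e-12  # clamp to avoid log(0)
    prob = min(max(prob, eps), 1.0 - eps)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))

def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """The discriminator wants to score real samples as 1 and fakes as 0."""
    losses = [bce(p, 1) for p in d_real] + [bce(p, 0) for p in d_fake]
    return sum(losses) / len(losses)

def generator_loss(d_fake: Sequence[float]) -> float:
    """The generator wants the discriminator to score its fakes as real (1)."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs: probability that a sample is real.
early = {"real": [0.9, 0.8], "fake": [0.1, 0.2]}  # fakes are easy to spot
late = {"real": [0.5, 0.5], "fake": [0.5, 0.5]}   # discriminator is guessing

print(round(generator_loss(early["fake"]), 3))  # 1.956
print(round(generator_loss(late["fake"]), 3))   # 0.693
```

As the generator improves, its loss falls while the discriminator's loss rises toward log 2 (about 0.693), the value it gets when it can do no better than guess.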

Popular Platforms and Trends

- Mobile applications offering one-click face swaps

- Online marketplaces selling custom deepfake services

- Social networks where users challenge each other with AI-generated pranks

- Video streaming sites hosting tutorials and code repositories

Ethical Challenges and the Threat to Trust

The proliferation of manipulated media threatens the very notion of authenticity on the internet. When videos can be fabricated effortlessly, audiences may grow suspicious of legitimate news broadcasts, personal messages, or corporate announcements. This erosion of credibility plays directly into the hands of those who spread misinformation to sow discord or influence public opinion. Furthermore, malicious actors can weaponize deepfakes to damage individual reputations, using anything from non-consensual sexual content to staged scenarios that falsely incriminate or defame.

Political Manipulation

During election cycles, deepfakes can be deployed to fabricate statements by candidates or to misrepresent their opponents. Such tactics undermine democratic processes, prompting calls for urgent safeguards. Even after a deceptive clip is debunked, initial impressions often persist, so a deepfake can deliver a strategic advantage even if it circulates only briefly.

Social Implications

Beyond politics, deepfakes fuel online harassment, bullying, and extortion. Victims struggle to clear their names, while platforms scramble to balance free expression against the need to protect users. In the absence of clear policies, enforcement remains decentralized, inconsistent, and often too slow to prevent harm.

Legal and Social Responses

Governments, tech companies, and advocacy groups have begun crafting frameworks designed to hold creators and distributors of malicious deepfakes to account. Legislative bodies worldwide debate bills introducing liability for platforms that fail to remove harmful content promptly, while law enforcement agencies develop forensic tools to trace deepfake origins and identify bad actors. Public awareness campaigns emphasize media literacy, encouraging users to question the source, context, and veracity of sensational clips.

Regulation and Accountability

Proposed regulations include mandatory watermarking for AI-generated media and penalties for non-consensual or deceptive uses. Tech firms are exploring terms-of-service updates that explicitly ban the upload of unauthorized manipulated content. By requiring transparency around the creation process, stakeholders hope to deter misuse and create a legal basis for remediation.

Technical Solutions

Innovations in detection aim to identify subtle inconsistencies in lighting, eye movement, or audio patterns. Watermarking algorithms embed invisible signatures in authentic videos, while blockchain-based provenance systems record every edit step, offering an immutable audit trail. Collaboration between academia and industry has yielded open challenges that push detection networks to higher levels of accuracy, though adversaries continuously refine methods to evade detection.

- Digital forensics tools analyzing pixel-level artifacts

- AI-based classifiers trained on vast libraries of real and fake samples

- Secure watermarking integrated into cameras and streaming services
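
The idea behind a provenance audit trail can be sketched with a simple hash chain, in which every recorded edit commits to the hash of the entry before it. This is only an illustration under stated assumptions: it is a single-machine, tamper-evident log rather than a real blockchain or any specific provenance standard, and the function names, the action labels, and the placeholder byte strings are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def entry_hash(prev_hash: str, action: str, content_digest: str) -> str:
    """Hash an entry together with the hash of the entry before it."""
    payload = json.dumps(
        {"prev": prev_hash, "action": action, "content": content_digest},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_edit(log: list, action: str, media_bytes: bytes) -> None:
    """Record one edit step, chained to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    content_digest = hashlib.sha256(media_bytes).hexdigest()
    log.append({
        "prev": prev_hash,
        "action": action,
        "content": content_digest,
        "hash": entry_hash(prev_hash, action, content_digest),
    })

def verify(log: list) -> bool:
    """Return True only if no entry was altered, removed, or reordered."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        expected = entry_hash(entry["prev"], entry["action"], entry["content"])
        if entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True

log = []
append_edit(log, "capture", b"raw-frame-bytes")
append_edit(log, "crop", b"cropped-frame-bytes")
print(verify(log))  # True

log[0]["action"] = "face-swap"  # rewrite history after the fact
print(verify(log))  # False
```

Because each hash depends on everything before it, quietly changing an earlier step invalidates the chain. A production system would additionally need signatures and distributed storage so that an attacker cannot simply rebuild the whole log.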

Protecting Privacy and Ensuring Consent

The creation of a deepfake often involves the unauthorized reuse of an individual’s likeness, raising serious privacy concerns. In many cases, subjects are unaware that their images or voices have been harvested, resulting in potential emotional distress, legal violations, and long-term reputational damage. Advocates call for stricter controls on data collection, explicit consent requirements, and the right to request takedown or correction of manipulated media.

Impact on Individual Reputation

When false content spreads rapidly, victims may lose employment opportunities, face social ostracism, or even encounter direct threats. Once a deepfake surfaces, traditional remediation—such as legal action or public clarification—often fails to reach the same audience or recapture lost trust, leaving lasting scars.

Best Practices for Users

- Verify unfamiliar videos with reputable fact-checking sites

- Seek out original sources before sharing sensational clips

- Keep personal data and images secure to limit unauthorized access

- Use privacy settings on social platforms to control who can tag or download your content

Future Directions and the Role of Detection Mechanisms

Ongoing research focuses on resilient detection systems that adapt as generative models evolve. One promising avenue involves combining human expertise with automated screening: AI flags suspect content, and trained analysts conduct deeper verification. Education initiatives aim to foster a culture of healthy skepticism, teaching the public how to spot telltale signs of manipulation. Meanwhile, cross-industry partnerships are essential for sharing threat intelligence and refining best practices.
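
The human-in-the-loop workflow described above can be sketched as a simple triage step. This is an assumed design rather than a description of any deployed system: the `Clip` type, the `fake_score` field, and the 0.7 threshold are hypothetical stand-ins for whatever score an automated detector would actually produce.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple

@dataclass
class Clip:
    clip_id: str
    fake_score: float  # hypothetical detector output in [0, 1]; higher = more suspect

def triage(clips: Iterable[Clip], flag_threshold: float = 0.7) -> Tuple[List[Clip], List[Clip]]:
    """Split clips into an analyst review queue and a cleared list.

    Flagged clips are sorted so analysts see the most suspect items first.
    """
    clips = list(clips)
    flagged = sorted(
        (c for c in clips if c.fake_score >= flag_threshold),
        key=lambda c: c.fake_score,
        reverse=True,
    )
    cleared = [c for c in clips if c.fake_score < flag_threshold]
    return flagged, cleared

queue, cleared = triage([Clip("a", 0.20), Clip("b", 0.90), Clip("c", 0.75)])
print([c.clip_id for c in queue])    # ['b', 'c']
print([c.clip_id for c in cleared])  # ['a']
```

The threshold sets the trade-off: lowering it sends more clips to analysts and catches more fakes, at the cost of a longer review queue.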

Collective Responsibility for a Safer Digital Environment

Ultimately, combating the risks associated with deepfakes requires a multi-stakeholder approach. Content creators, platform operators, policymakers, and everyday internet users each bear a share of the burden. By promoting transparency, investing in detection research, and strengthening legal frameworks, society can harness the creative potential of AI while safeguarding against its most dangerous abuses.